Have you ever felt like you’re talking to a brick wall when using AI tools? You ask a simple question, and instead of a clear answer, you get a rambling mess or something completely off-base. It’s frustrating, right? That’s the everyday reality for many people diving into generative AI, but there’s a skill that can turn those headaches into triumphs: prompt engineering. In this deep dive, we’ll unpack what prompt engineering really means in the world of AI, especially as we head into 2025. Think of it as the secret sauce that makes AI not just smart, but useful—like teaching a talented but scatterbrained friend how to focus on the task at hand.

By the end of this guide, you’ll not only grasp the basics but also learn advanced tricks, real-world applications, and even why some folks are whispering that this whole field might be on its way out. We’ll draw on the latest trends, backed by insights from industry leaders, to help you craft prompts that deliver precise, creative, and secure results. Whether you’re a marketer tweaking content generation or a developer building AI apps, mastering this could supercharge your workflow. Let’s jump in.

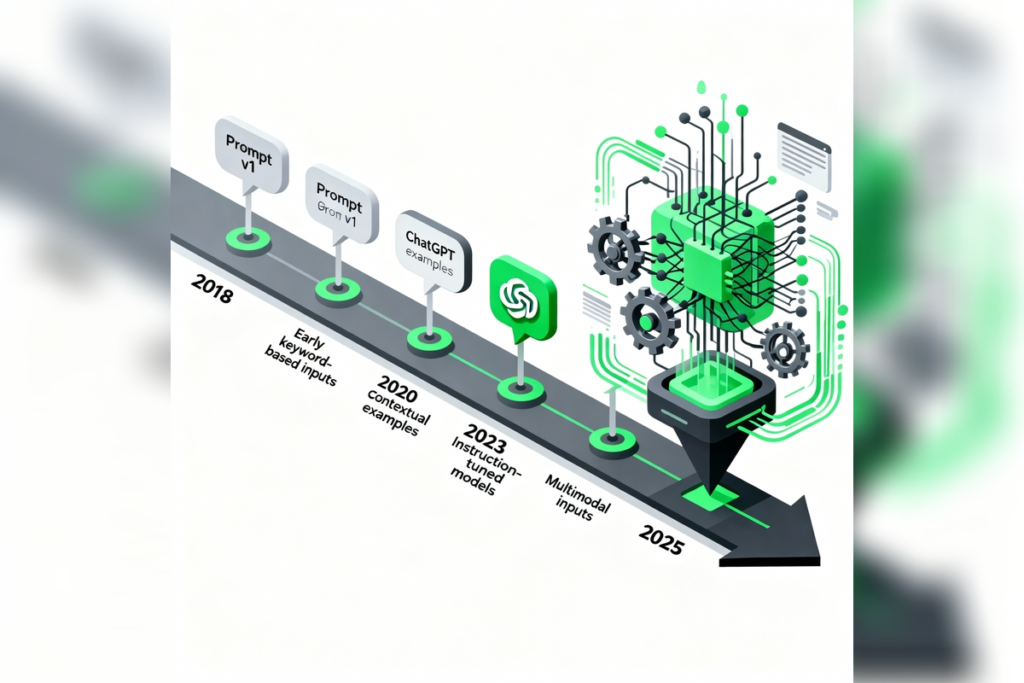

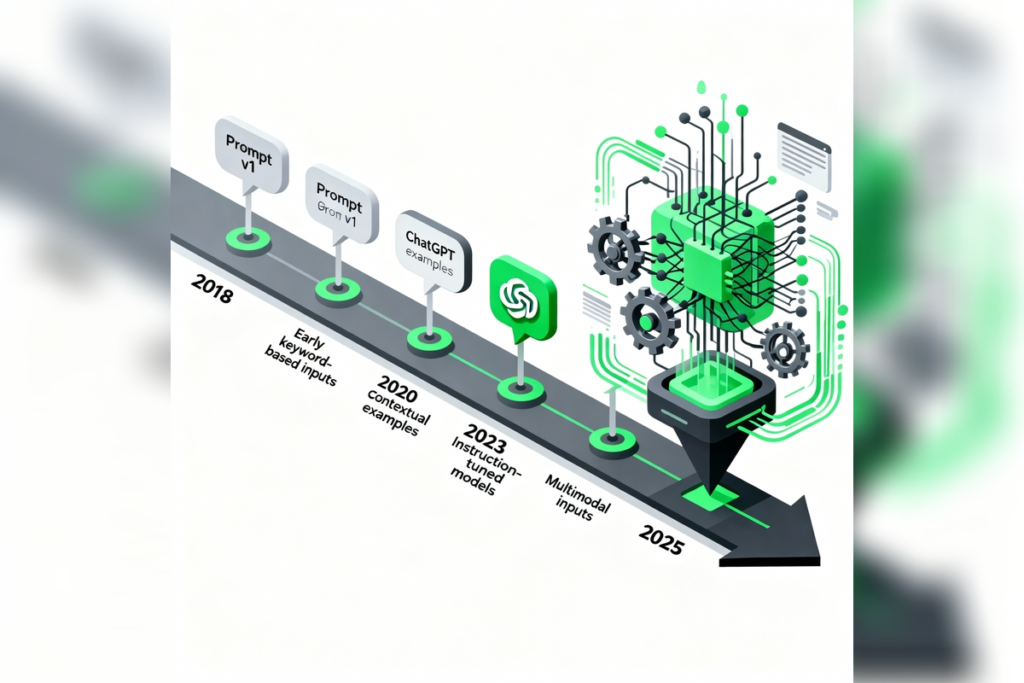

The Evolution of Prompt Engineering: From Niche Hack to Essential Skill

Prompt engineering didn’t just appear overnight with the hype around ChatGPT. Its roots trace back to around 2018, when researchers started experimenting with ways to frame natural language tasks as simple question-answering setups. Back then, AI models were clunky, and the idea was to squeeze more juice out of them without retraining the whole system. Fast forward to the early 2020s, and boom—the explosion of large language models (LLMs) like GPT-3 changed everything. Suddenly, everyone was realizing that the quality of your input (the prompt) could make or break the output.

By 2022, with ChatGPT’s launch, prompt engineering went mainstream. People were sharing thousands of public prompts online, from creative writing templates to code debugging aids. Google jumped in with chain-of-thought prompting, a technique that got models to “think” step by step, boosting accuracy on tough problems like math puzzles. Databases for text and image prompts popped up, and by 2023, multimodal AI—handling text, images, and even audio—made the field even richer.

But 2025? That’s where things get really interesting. According to recent analyses, we’re seeing a shift toward automation in prompt creation. Tools now use AI itself to generate better prompts, cutting down on manual trial-and-error. Mega-prompts—those long, detailed beasts that pack in context, examples, and instructions—are rising, especially with models like Gemini 2.5 that handle million-token contexts without breaking a sweat.1 Conversational AI integration is another big trend, where prompts evolve over multi-turn chats, building memory like a human conversation. And don’t get me started on generative AI for prompts: imagine feeding an LLM a vague idea and letting it spit out an optimized version. It’s meta, and it’s powerful.

Yet, there’s a cloud on the horizon. Some experts argue prompt engineering is fading as models get smarter at intuiting intent.2 Jobs titled “prompt engineer” are reportedly obsolete in many circles, with salaries that once hit six figures now redirecting to broader AI roles like context engineers or workflow architects.3 But is it really dead? Not quite—it’s evolving. In 2025, the focus is on hybrid approaches, blending prompts with retrieval systems and fine-tuning for enterprise-level reliability. This evolution underscores a key truth: prompt engineering isn’t static; it’s adapting to AI’s rapid growth.

Why Prompt Engineering Matters: Pros, Cons, and Real-World Impact

So, why bother with all this? In a world where AI tools are everywhere—from drafting emails to generating art—prompt engineering is your ticket to control. The pros are clear: it’s cost-effective. No need for expensive retraining; a well-crafted prompt can unlock 90% of a model’s potential right out of the box. It’s accessible too—anyone with a knack for words can do it, no PhD required. Plus, it enhances safety; thoughtful prompts can steer clear of biases or harmful outputs.

Take pros in action: In customer support, a simple role-based prompt like “Act as a friendly expert resolving billing issues” can turn a generic chatbot into a empathetic helper, reducing resolution time by up to 50% in some cases. Or in content creation, specificity prevents hallucinations—those made-up facts that plague uncrafted queries.

But let’s be real—it’s not all sunshine. The cons? Model dependency is huge. What works on GPT-4o might flop on Claude 4, leading to frustrating inconsistencies. It’s time-intensive; iterating prompts can feel like debugging code without a compiler. And in 2025, with adversarial attacks on the rise—think prompt injections that trick AI into spilling secrets—security risks loom large.4 Over-reliance on prompts might also stifle innovation, as folks tweak inputs instead of pushing model boundaries.

Despite these drawbacks, the impact is undeniable. In healthcare, prompts help triage patient queries with 91% accuracy, saving lives by prioritizing urgencies.4 In finance, retrieval-augmented prompts pull real-time data to avoid outdated advice. And for creators, it’s a game-changer: viral trends like “Draw My Life” animations rely on modular prompts to personalize outputs at scale. Bottom line? Prompt engineering bridges the gap between human intent and AI capability, making tech feel more intuitive and less like black magic.

Core Principles of Effective Prompt Engineering

At its heart, prompt engineering is about communication. You’re not just typing words; you’re directing an AI’s “thought” process. The first principle? Clarity. Vague prompts lead to vague results—it’s like asking a chef for “food” and getting a random salad. Instead, be specific: define the task, audience, tone, and format upfront.

Structure matters too. Use delimiters like triple quotes or sections (e.g., “### Context ### Task”) to organize info, especially in long prompts. This helps the model parse without confusion. Another key: context richness. Provide background to ground the AI, but compress it—2025 models thrive on efficiency, so trim fluff to save tokens and speed up responses.

Specificity ties into constraints. Want a bullet list? Say so. Need it under 100 words? Specify. This anchors outputs, reducing wild variations. Finally, iteration is non-negotiable. Test, tweak, and log what works. Tools like PromptEval can quantify sensitivity to changes, showing how a comma might swing accuracy by 40%.5 These principles aren’t rocket science, but applying them turns average AI interactions into precise tools.

Essential Prompt Engineering Techniques for 2025

Now, let’s get into the meat: techniques. We’ll start with basics and build to advanced ones, with tips tailored for today’s models.

Zero-Shot, One-Shot, and Few-Shot Prompting: Building from Scratch

Zero-shot is the no-frills approach: just instruct without examples. It’s great for simple tasks. Example: “Summarize the key risks of climate change in three sentences.” No demos needed, and it shines with robust 2025 models that “get” intent intuitively.

One-shot adds a single example to set the tone. For translation: “English: Hello → French: Bonjour. English: Goodbye → French:” Boom, the model fills in “Au revoir.” It’s quick for formatting consistency.

Few-shot ramps it up with 2-5 examples, teaching patterns without full training. In 2025, this is gold for classification: Show three complaint summaries labeled “high priority” or “low,” then classify a new one. Pro tip: Order matters—put the toughest example first to challenge the model.

These are foundational because they’re lightweight, but combine them for power.

Chain-of-Thought Prompting: Thinking Step by Step

Ever watch a kid solve a puzzle by talking it out? That’s chain-of-thought (CoT) in a nutshell. Instead of jumping to answers, prompt the AI to reason sequentially: “Solve this math problem step by step: If a train leaves at 60 mph…” It boosts accuracy on logic tasks by 20-50% in benchmarks like GSM8K.

In 2025, zero-shot CoT is hot—just add “Let’s think step by step.” For complex analysis, use tags: “<reasoning>Break it down</reasoning> <answer>Final output</answer>.” Claude 4 loves this for structured outputs. A real example: In cybersecurity, “Analyze this code vulnerability: First, identify the flaw, then explain impact, finally suggest fixes.” It uncovers nuances humans might miss.

Role-Based and Context-Rich Prompting: Assigning Personas

Assign a role to guide behavior: “You are a seasoned journalist writing for a tech blog. Explain quantum computing simply.” This sets tone and expertise, making outputs more engaging. In multi-turn chats, it builds continuity—like a virtual consultant remembering your style.

Layer in context for depth: “Given this market report [paste data], as a financial advisor, recommend investments for a retiree.” 2025’s long-context models like Gemini make this seamless, handling thousands of words without losing thread.

Advanced Techniques: Tree-of-Thoughts, RAG, and Meta Prompting

For the pros, tree-of-thoughts (ToT) explores branches of reasoning, like a decision tree: “Generate three possible solutions, evaluate each, then pick the best.” It’s ideal for planning, using beam search to prune bad paths.

Retrieval-augmented generation (RAG) pulls external data: “Based on this document [query database], answer…” GraphRAG adds knowledge graphs for interconnected insights, perfect for enterprise in 2025.5 It cuts hallucinations by grounding in facts.

Meta prompting? Use AI to craft prompts: “Optimize this vague query into a detailed one for code review.” It’s self-improving and a 2025 trend for automation.6 Self-consistency generates multiple outputs and votes on the best, enhancing reliability for ambiguous tasks.

These aren’t just tricks—they’re tools for tackling real complexity.

Best Practices and Tips for Mastering Prompt Engineering in 2025

Success boils down to practice. Start with A/B testing: Craft two versions, compare outputs, refine. Log everything in a notebook—patterns emerge fast.

Security is crucial now. Use scaffolding: “Evaluate if this request is safe; if not, decline.” Test against jailbreaks with tools like Gandalf.4 For efficiency, compress: “Summarize in 1) pros, 2) cons, 3) verdict.”

Model-specific tweaks: GPT-4o? Crisp and direct. Claude? XML tags. Gemini? Long contexts with hierarchies. And always iterate—2025’s automated optimizers like DSPy can help, but human oversight keeps it ethical.

Common pitfalls? Overloading prompts or ignoring token limits. Remember, less is often more.

Real-World Applications and Case Studies

Prompt engineering shines in action. In legal tech, context-rich prompts summarize contracts, slashing review time from hours to minutes. A Dropbox case study used guarded prompts to secure AI apps, preventing data leaks.4

Healthcare? Few-shot CoT prompts assess symptoms: “Based on these examples, rate urgency for fever and cough.” Accuracy jumps to 91%.4 Marketing teams use role-based mega-prompts for personalized campaigns, boosting engagement by 30%.

Even in gaming, ToT helps AI NPCs make dynamic choices. These cases show it’s not theory—it’s transforming industries.

The Future of Prompt Engineering: Evolving or Obsolete?

Is prompt engineering dead in 2025? Headlines say yes—models are so advanced, basic prompting feels redundant, and jobs are shifting to automated workflows.7 But others counter it’s morphing into context engineering, managing full ecosystems of data and agents.8 With trends like AI-generated prompts and integrated memory, it’s less about crafting and more about orchestrating.

My take? It’s not obsolete; it’s foundational. As AI embeds deeper into daily life, the ability to guide it precisely will remain key. Expect more focus on ethical prompting, multimodal integration, and defenses against exploits. The future is bright for those who adapt.

How to Get Started with Prompt Engineering Today

Ready to dive in? Grab a tool like ChatGPT or Claude, start simple: Zero-shot a summary, then add CoT. Build a portfolio—document prompts and results. Resources? Check OpenAI’s guide or Lakera’s 2025 tips.4 Join communities on Reddit for shared hacks.

Practice daily: Rewrite bad outputs into better prompts. Soon, you’ll engineer like a pro.

In wrapping up, prompt engineering is the bridge to AI’s full potential. It’s evolving, challenging, and endlessly rewarding. Whether for fun or fortune, honing this skill in 2025 will keep you ahead. What’s your first prompt going to be?

good website and good article you post and